AI Content Generator: an n8n workflow automating ASMR video creation

Required API keys and settings

Main services:

- OpenAI API key - for generating ideas and scene scripts (two chat model nodes, both gpt-4o-mini) https://platform.openai.com/docs/overview

- Fal.ai API key - for access to ByteDance Seedance, MMaudio-v2 and the FFmpeg API https://fal.ai/dashboard/keys

- Google Sheets OAuth2 - to save intermediate data and results

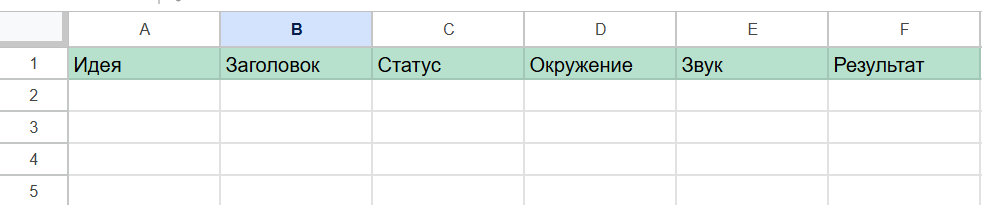

Google Sheets structure:

Create a table with columns:

- Idea - a brief description of the action (match key for the final update in Step 7)

- Title - viral caption with hashtags

- Environment - description of the shooting environment

- Sound - description of the ASMR sound

- Status - production status

- Result - URL of the finished video

Setting up Fal.ai:

All the necessary models are available with a single API key:

- ByteDance Seedance - to generate videos

- MMaudio-v2 - for creating ASMR sounds

- FFmpeg API - for editing and compositing

Workflow features

Asynchronous processing:

- Video generation: 4 minutes of waiting (complex ASMR scenes)

- Creating audio: 1 minute of waiting (sync with video)

- Editing: 1 minute of waiting (compositing and encoding)
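The three fixed waits alone set a floor on the run time of one video; the LLM calls and batching intervals come on top of this:

```latex
T_{\text{wait}} = \underbrace{240}_{\text{video}} + \underbrace{60}_{\text{audio}} + \underbrace{60}_{\text{editing}} = 360\ \text{s} = 6\ \text{min}
```

This accounts for most of the 7-10 minutes quoted for a complete run.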

Content quality:

- Detailed prompts: 1000-2000 characters per scene

- Cinematic shooting: macro details, professional lighting

- ASMR focus: emphasis on tactile and sound sensations

- Viral potential: trending hashtags and emotional headlines

Structuring:

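- 3-6 scenes per video depending on complexity (the scene prompt requests 3 by default)

- 30 seconds total duration (three 10-second clips; the editing step places only the first three on the timeline)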

- Logical progression: from the start of cutting to the final result

Process tracking:

- All steps are logged to Google Sheets

- You can track the idea, title, and the finished video

- A history of all ASMR videos created

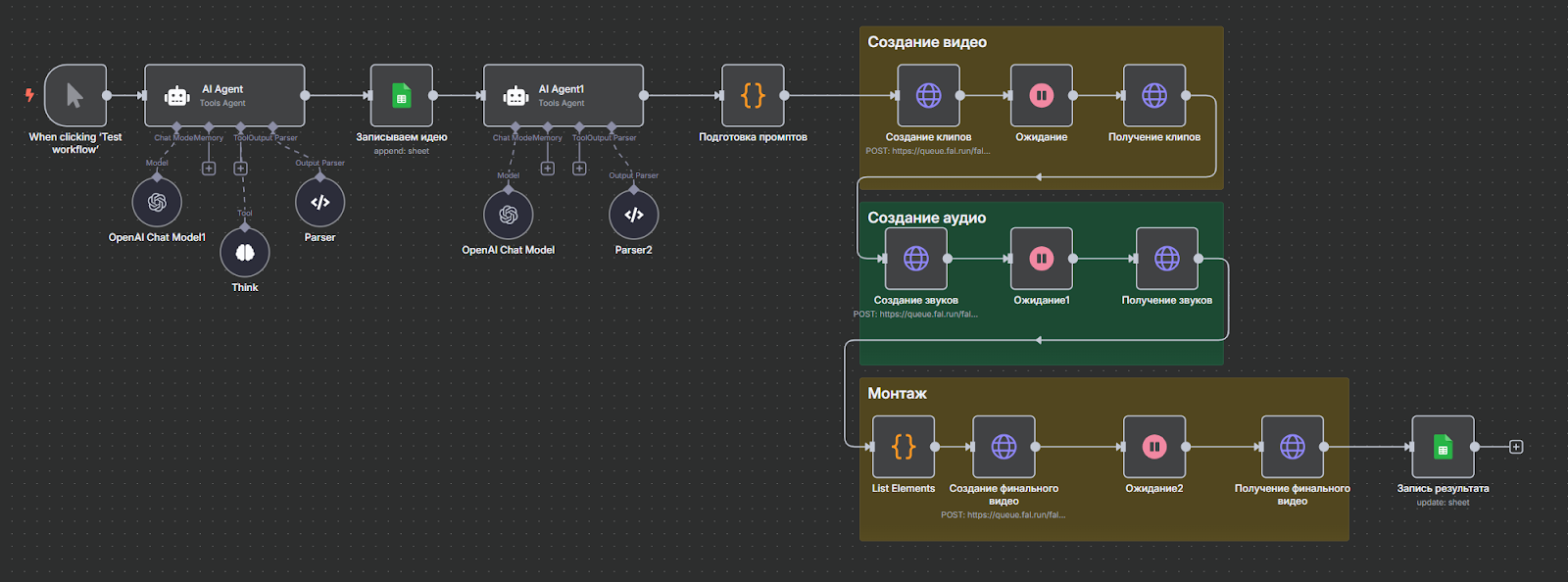

Process description

This automation is a full-fledged factory for viral ASMR content. The system automatically generates ideas for videos of various materials being cut with a sharp knife, writes detailed scripts of 3-6 scenes, generates the clips with the ByteDance Seedance AI model, adds matching ASMR sounds, and edits everything into a final 30-second video.

Detailed automation architecture

STEP 1: LAUNCH AND GENERATE AN IDEA

1.1 Manual launch - When clicking 'Test workflow' (replace with your own trigger as needed)

Purpose: Entry point for starting content production

Settings:

- Type: Manual Trigger

- Purpose: Starts the entire ASMR video creation pipeline

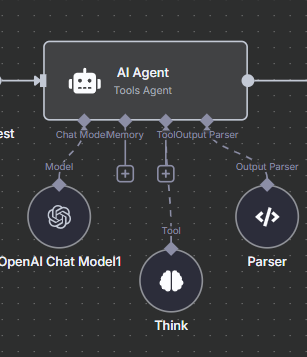

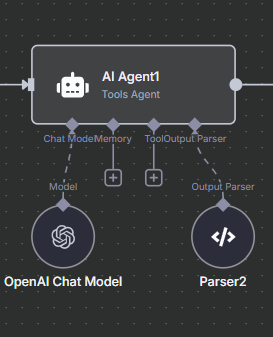

1.2 Idea Generation - AI Agent

Purpose: AI creates a viral idea for an ASMR video about cutting materials

Connected components:

- OpenAI Chat Model1 (gpt-4o-mini)

- Parser (Structured Output Parser)

- Think (self-test tool)

Main prompt:

Give me an idea about

[[

a random hard material or element being sliced with a sharp knife - have your idea be in this format: “(color) (material) shaped like a (random object)”. Examples for your inspiration: glass shaped like a strawberry, crystal shaped like a bear, dry ice shaped like a snowflake, diamond shaped like a hat, etc.

]]

Use the Think tool to review your output

System prompt:

Role: You are an AI designed to generate 1 immersive, realistic idea based on a user-provided topic. Your output must be formatted as a JSON array (single line) and follow all the rules below exactly.

RULES:

Only return 1 idea at a time.

The user will provide a key topic (e.g. “glass cutting ASMR,” “wood carving sounds,” “satisfying rock splits”).

The Idea must:

- Be under 13 words.

- Describe an interesting and viral-worthy moment, action, or event related to the provided topic.

- Can be as surreal as you can get, doesn't have to be real-world!

The Caption must be:

- Short, punchy, and viral-friendly.

- Include one relevant emoji.

- Include exactly 12 hashtags in this order:

  - 4 topic-relevant hashtags

  - 4 all-time most popular hashtags

  - 4 currently trending hashtags (based on live research)

- All hashtags must be lowercase.

- Set Status to “for production” (always).

The Environment must:

- Be under 20 words.

- Match the action in the Idea exactly.

- Clearly describe:

Where the event is happening (e.g. clean studio table, rough natural terrain, laboratory bench)

Key visuals or background details (e.g. dust particles, polished surface, subtle light reflections)

Style of scene (e.g. macro close-up, cinematic slow-motion, minimalist, abstract)

- Ok with fictional settings.

The Sound must:

- Be under 15 words.

- Describe the primary sound that makes sense to happen in the video. This will be fed to a sound model later on.

OUTPUT FORMAT (single-line JSON array):

```json
[
  {
    "Caption": "Short viral title with emoji #4_topic_hashtags #4_all_time_popular_hashtags #4_trending_hashtags",
    "Idea": "Short idea under 13 words",
    "Environment": "Brief vivid setting under 20 words matching the action",
    "Sound": "Primary sound description under 15 words",
    "Status": "for production"
  }
]
```

Structured Output Parser schema ("Parser"), JSON example:

```json
[
  {
    "Caption": "Diver Removes Nets Off Whale 🐋 #whalerescue #marinelife #oceanrescue #seahelpers #love #nature #instagood #explore #viral #savenature #oceanguardians #cleanoceans",
    "Idea": "Diver carefully cuts tangled net from distressed whale in open sea",
    "Environment": "Open ocean, sunlight beams through water, diver and whale, cinematic realism",
    "Sound": "Primary sound description under 15 words",
    "Status": "for production"
  }
]
```

WHAT IS the Structured Output Parser: a sub-node that forces the agent to return data in a precisely defined JSON structure, here an array containing one object. Without it, the model could return an object or free text in any form.

WHY IS IT NEEDED HERE:

- Ensures that all 5 fields are present: Caption, Idea, Environment, Sound, Status

- Gives downstream nodes, including Google Sheets, a predictable array to read from

- Allows you to access data via $json.output[0].Idea

- Reduces parsing errors when passing data further

HOW IT WORKS:

- The agent receives the schema and must return a JSON array containing one object

- The parser enforces the structure and field types; the length and content limits come from the system prompt, not from the schema

- Downstream nodes can then reliably extract individual fields
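Because the parser checks only the JSON shape, the word limits, the 12-hashtag rule and the fixed Status value from the system prompt are never verified in the workflow. A minimal sketch of such a check in plain JavaScript, assuming it receives the parsed array (what the workflow reads as `$json.output`); the function `validateIdea` and its messages are hypothetical and not part of the original workflow:

```javascript
// Hypothetical post-check for the idea agent's output (not in the original workflow).
// `output` is the parsed array, i.e. what the workflow reads as $json.output.
function validateIdea(output) {
  if (!Array.isArray(output) || output.length !== 1 || typeof output[0] !== "object" || output[0] === null) {
    return ["output must be an array with exactly one idea"];
  }
  const idea = output[0];
  const problems = [];
  const words = (s) => String(s ?? "").trim().split(/\s+/).filter(Boolean).length;

  for (const field of ["Caption", "Idea", "Environment", "Sound", "Status"]) {
    if (typeof idea[field] !== "string" || idea[field].trim() === "") {
      problems.push(`missing field: ${field}`);
    }
  }
  // Word limits from the system prompt ("under N words" means fewer than N).
  if (words(idea.Idea) >= 13) problems.push("Idea must be under 13 words");
  if (words(idea.Environment) >= 20) problems.push("Environment must be under 20 words");
  if (words(idea.Sound) >= 15) problems.push("Sound must be under 15 words");

  // Hashtag rules: exactly 12, all lowercase.
  const hashtags = String(idea.Caption ?? "").match(/#\S+/g) ?? [];
  if (hashtags.length !== 12) problems.push(`expected 12 hashtags, got ${hashtags.length}`);
  if (hashtags.some((tag) => tag !== tag.toLowerCase())) problems.push("hashtags must be lowercase");

  if (idea.Status !== "for production") problems.push('Status must be "for production"');
  return problems; // an empty array means every rule passed
}
```

Adapted into an n8n Code node after the AI Agent, a non-empty result could be turned into a `throw` so that a malformed idea stops the run before any paid generation starts.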

Think tool: a tool the agent can call to reason about and review its draft answer before returning the final output.

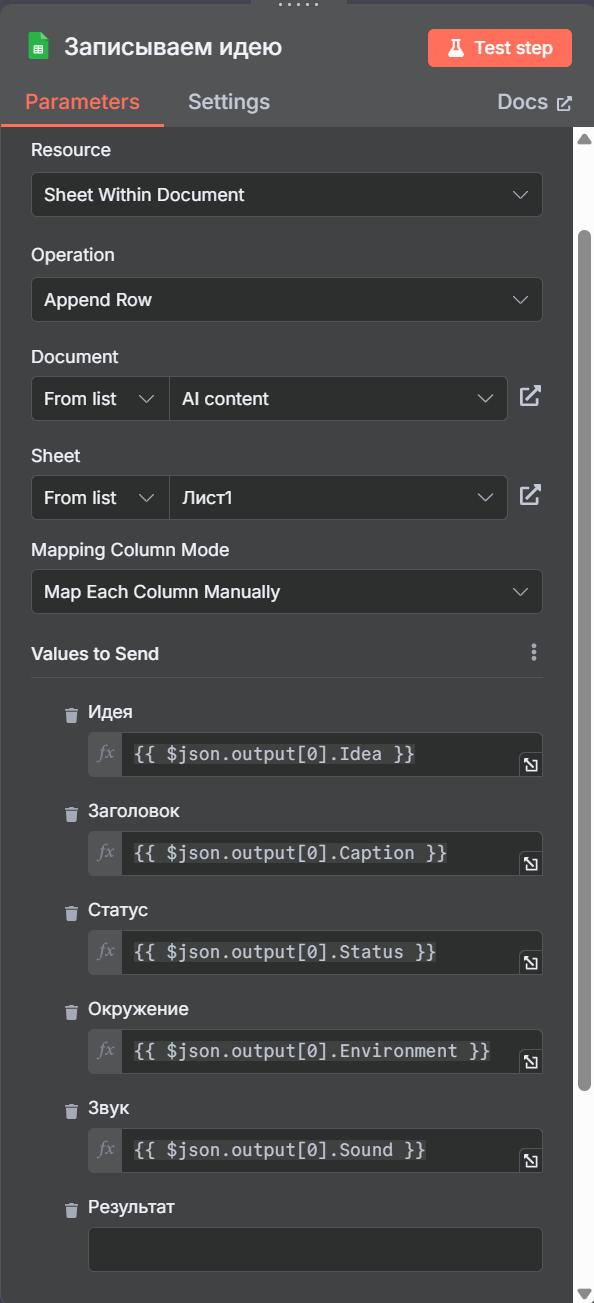

STEP 2: SAVING THE IDEA

2.1 Saving to a table - Writing down an idea

Purpose: Saves the generated idea to Google Sheets for tracking

Google Sheets settings:

- Operation: Append

- Document ID: the ID of your own spreadsheet (the original workflow used 19-XVSVMGQB_6OAGGH-PV3OTMJ_M5M9EDLVKW-CD_ZJ4)

- Sheet Name: Sheet1

- Columns Mapping:

  - Idea: {{ $json.output[0].Idea }}

  - Title: {{ $json.output[0].Caption }}

  - Environment: {{ $json.output[0].Environment }}

  - Sound: {{ $json.output[0].Sound }}

  - Status: {{ $json.output[0].Status }}
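The column mapping above is a plain field rename. As an illustration, here is the same transformation in JavaScript, assuming the sheet columns Idea, Title, Environment, Sound, Status and Result; the helper `toSheetRow` is hypothetical and does not correspond to a node in the workflow:

```javascript
// Hypothetical equivalent of the "Writing down an idea" column mapping:
// converts the agent's parsed output into one Google Sheets row.
function toSheetRow(output) {
  const idea = output[0]; // same as $json.output[0] in the n8n expressions
  return {
    Idea: idea.Idea,
    Title: idea.Caption, // the sheet column is Title, the agent field is Caption
    Environment: idea.Environment,
    Sound: idea.Sound,
    Status: idea.Status,
    Result: "", // left empty here; filled by the final update step
  };
}
```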

Table structure:

| Idea | Title | Environment | Sound | Status | Result |
|---|---|---|---|---|---|
| [AI idea] | [Caption + hashtags] | [Environment description] | [ASMR sound] | for production | [empty] |

STEP 3: CREATING DETAILED SCENARIOS

3.1 Script Generation - AI Agent1

Purpose: AI writes detailed scene prompts for the video based on the saved idea (the main prompt asks for 3 scenes; the format allows up to 6)

Connected components:

- OpenAI Chat Model (gpt-4o-mini)

- Parser2 (Structured Output Parser)

Main prompt:

Give me 3 video scene prompts based on the idea

System prompt:

Role: You are a prompt-generation AI specializing in cinematic, ASMR-style video prompts. Your task is to generate a multi-scene video sequence that clearly shows a sharp knife actively cutting through a specific object in a clean, high-detail setting.

Your writing must follow this style:

- Sharp, precise cinematic realism.

- Macro-level detail with tight focus on the blade interacting with the object.

- The knife must always be in motion — slicing, splitting, or gliding through the material. Never idle or static.

- Camera terms are allowed (e.g. macro view, tight angle, over-the-blade shot).

Each scene must contain all of the following, expressed through detailed visual language:

- The main object or subject (from the Idea)

- The cutting environment or surface (from the Environment)

- The texture, structure, and behavior of the material as it's being cut

- A visible, sharp blade actively cutting

Descriptions should show:

- The physical makeup of the material - is it translucent, brittle, dense, reflective, granular, fibrous, layered, or fluid-filled?

- How the material responds to the blade - resistance, cracking, tearing, smooth separation, tension, vibration.

- The interaction between the blade and the surface - light reflection, buildup of particles, contact points, residue or dust.

- Any ASMR-relevant sensory cues like particle release, shimmer, or subtle movement, but always shown visually - not narrated.

Tone:

- Clean, clinical, visual.

- No poetic metaphors, emotion, or storytelling.

- Avoid fantasy or surreal imagery.

- All description must feel physically grounded and logically accurate.

Length:

- Each scene must be between 1,000 and 2,000 characters.

- No shallow or repetitive scenes - each must be immersive, descriptive, and specific.

- Each scene should explore a distinct phase of the cutting process, a different camera perspective, or a new behavior of the material under the blade.

Inputs:

Idea: "{{ $json['Idea'] }}"

Environment: "{{ $json['Environment'] }}"

Sound: "{{ $json['Sound'] }}"

Format:

Idea: “...”

Environment: “...”

Sound: “...”

Scene 1: “...”

Scene 2: “...”

Scene 3: “...”

Scene 4: “...”

Scene 5: “...”

Scene 6: “...”

Structured Output Parser schema ("Parser2"), JSON example:

```json
{
  "Idea": "An obsidian rock being sliced with a shimmering knife",
  "Environment": "Clean studio table, subtle light reflections",
  "Sound": "Crisp slicing, deep grinding, and delicate crumbling",
  "Scene 1": "Extreme macro shot: a razor-sharp, polished knife blade presses into the dark, granular surface of an obsidian rock, just beginning to indent.",
  "Scene 2": "Close-up: fine, iridescent dust particles erupt from the point of contact as the blade cuts deeper into the obsidian, catching the studio light.",
  "Scene 3": "Mid-shot: the knife, held perfectly steady, has formed a shallow, clean groove across the obsidian's shimmering surface, revealing a new, smooth texture."
}
```

WHAT IS the Structured Output Parser schema: here, the schema returns the scenario as an object with a separate field for each scene.

WHY IS IT NEEDED HERE:

- Splits scenarios into separate fields: Scene 1, Scene 2, Scene 3, etc.

- Allows you to extract each scene separately to create videos

- Defines the scene fields the agent fills in; the example above has Scene 1 to Scene 3, matching the request for 3 scenes, although the system prompt's format allows up to 6

- Preserves the original data (Idea, Environment, Sound) for the context

HOW IT WORKS:

- The agent receives the schema and must return an object with the fields Scene 1, Scene 2, etc.

- The system prompt asks for 1000-2000 characters of detailed description per scene; the schema itself does not check length

- The next node can extract all scenes to create prompts
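Since the 1000-2000 character rule and the 3-6 scene range live only in the system prompt, a short check can flag scenes that come back too short before any video credits are spent. A sketch, assuming it receives the parsed object with top-level "Scene N" keys as in the Parser2 example (in n8n that is presumably `$json.output`); `checkScenes` and its option names are hypothetical:

```javascript
// Hypothetical pre-flight check on the AI Agent1 output (not in the original workflow).
// Reports a scene count or scene lengths outside the ranges the system prompt requests.
function checkScenes(obj, { min = 1000, max = 2000, minScenes = 3, maxScenes = 6 } = {}) {
  const scenes = Object.entries(obj).filter(
    ([key, value]) => /^scene\s*\d+$/i.test(key) && typeof value === "string"
  );
  const problems = [];
  if (scenes.length < minScenes || scenes.length > maxScenes) {
    problems.push(`expected ${minScenes}-${maxScenes} scenes, got ${scenes.length}`);
  }
  for (const [key, text] of scenes) {
    if (text.length < min || text.length > max) {
      problems.push(`${key}: ${text.length} characters (want ${min}-${max})`);
    }
  }
  return problems; // empty when every scene fits the requested window
}
```

Note that the Parser2 example scenes are far shorter than 1000 characters, so running this check against that example would flag all three.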

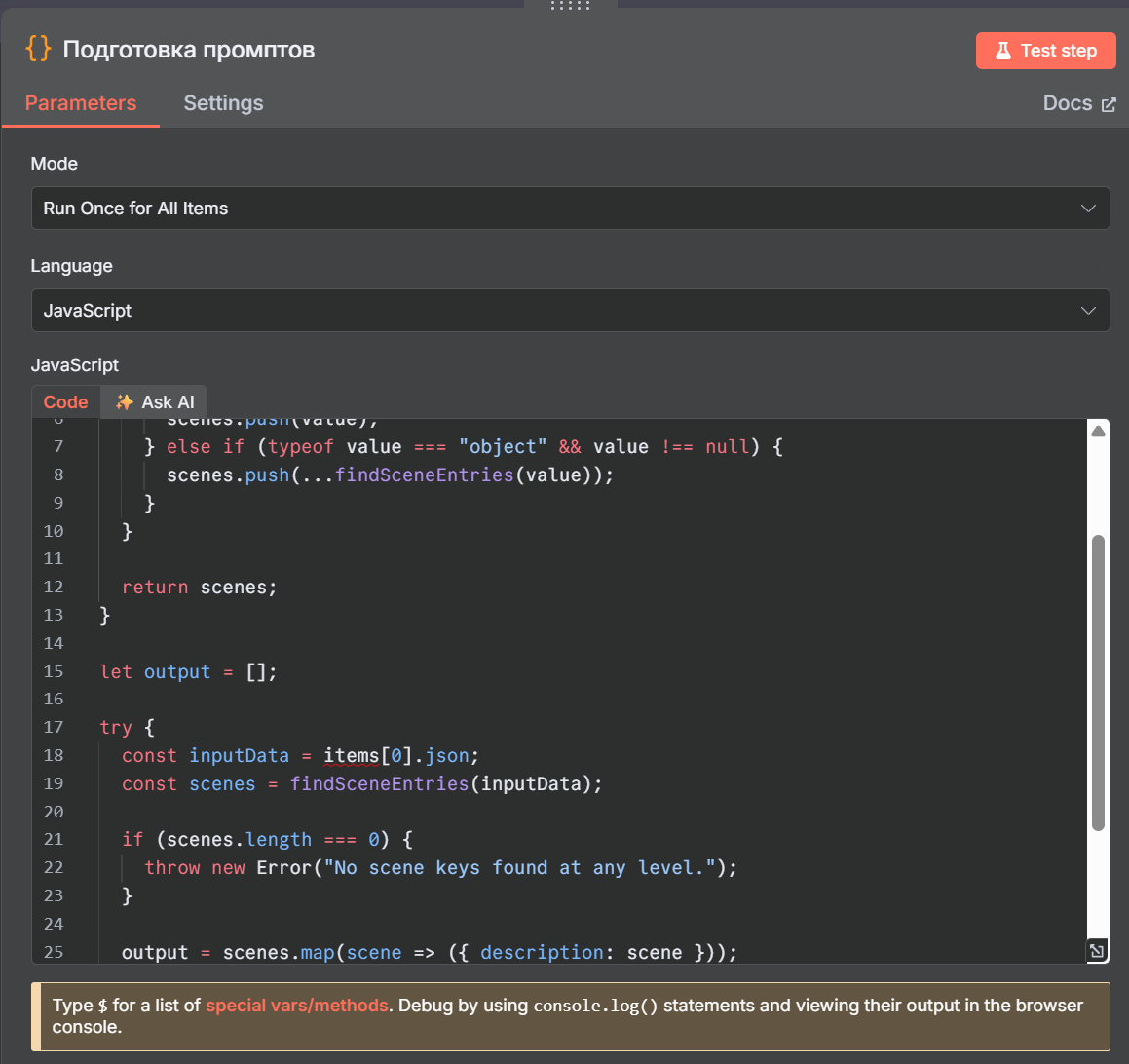

3.2 Preparing Prompts - Preparing Prompts

Purpose: JavaScript extracts all scenes from the AI response and prepares them for video generation

JavaScript code:

```javascript
// n8n Code node: collect every "Scene N" string from the AI Agent1 output,
// at any nesting level (for example under "output").
function findSceneEntries(obj) {
  const scenes = [];
  for (const [key, value] of Object.entries(obj)) {
    if (key.toLowerCase().startsWith("scene") && typeof value === "string") {
      scenes.push(value);
    } else if (typeof value === "object" && value !== null) {
      // Recurse into nested objects and arrays
      scenes.push(...findSceneEntries(value));
    }
  }
  return scenes;
}

let output = [];

try {
  const inputData = items[0].json;
  const scenes = findSceneEntries(inputData);

  if (scenes.length === 0) {
    throw new Error("No scene keys found at any level.");
  }

  // One output item per scene; the next HTTP node reads {{ $json.description }}
  output = scenes.map((scene) => ({ description: scene }));
} catch (e) {
  throw new Error("Could not extract scenes properly. Details: " + e.message);
}

return output;
```

What's going on:

- Scene search: Recursively searches for all keys starting with “scene”

- Extraction: Collects all found scenes into an array

- Formatting: Creates objects with a description field for each scene

- Verification: Throws an error if no scenes are found

The result: An array of objects like [{description: "Scene 1 text"}, {description: "Scene 2 text"}, ...]
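The Code node above depends on n8n's `items` global, so it cannot run on its own. The standalone variant below is an assumption, not the original node. It accepts only keys of the form "Scene N" (stricter than the original's "starts with scene" test) and sorts the results by N, so the clip order follows the scene numbers rather than the order in which the model emitted the keys:

```javascript
// Standalone variant of the "Preparing Prompts" logic (illustrative, not the original node).
// Collects "Scene N" strings at any depth and returns them ordered by N.
function extractScenes(obj) {
  const found = [];
  const walk = (node) => {
    for (const [key, value] of Object.entries(node)) {
      const match = /^scene\s*(\d+)$/i.exec(key);
      if (match && typeof value === "string") {
        found.push({ n: Number(match[1]), description: value });
      } else if (typeof value === "object" && value !== null) {
        walk(value); // recurse into nested objects such as { output: {...} }
      }
    }
  };
  walk(obj);
  if (found.length === 0) throw new Error("No scene keys found at any level.");
  return found.sort((a, b) => a.n - b.n).map(({ description }) => ({ description }));
}
```

Inside an n8n Code node, the equivalent call would be `return extractScenes(items[0].json);`.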

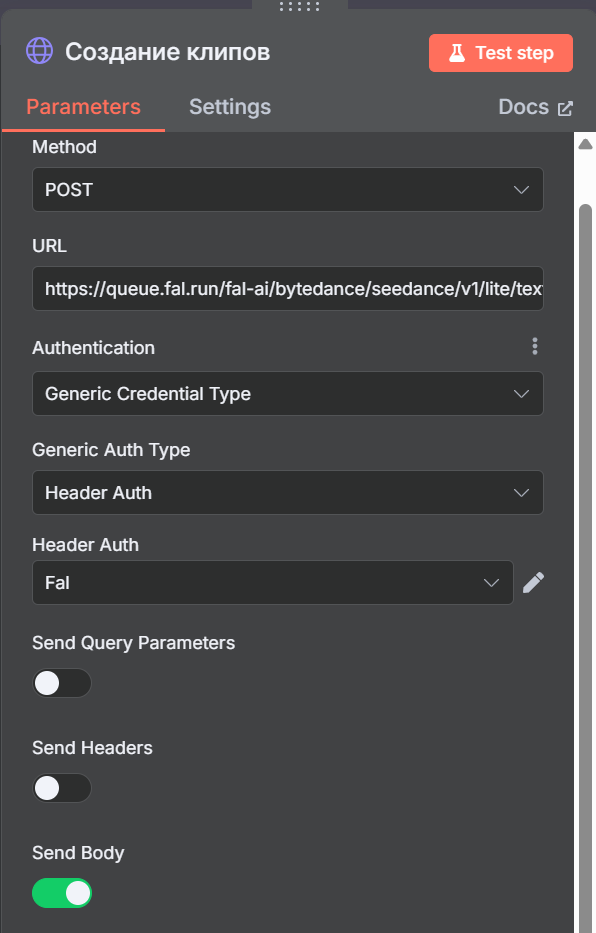

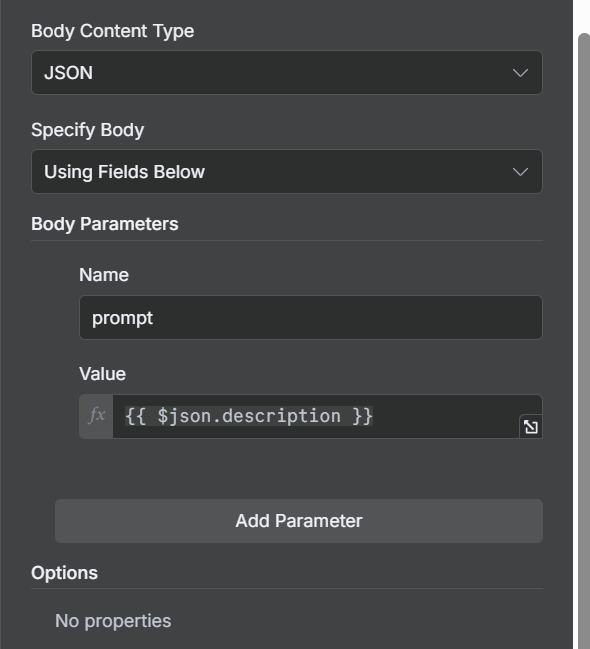

STEP 4: CREATE VIDEO CLIPS

4.1 Generating videos - Creating clips

Purpose: Creates one video clip per scene via ByteDance Seedance (v1 Lite, text-to-video)

HTTP Request settings:

- Method: POST

- URL: https://queue.fal.run/fal-ai/bytedance/seedance/v1/lite/text-to-video

- Authentication: Fal API key

- Body Parameters:

  - prompt: {{ $json.description }}

What's going on:

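- Each scene is sent to ByteDance Seedance as a separate request

- The workflow treats each result as a 10-second clip; the body sends only prompt, so confirm on the model's fal.ai page that its default clip length is 10 seconds, or add the corresponding input

- A request_id is returned, which is used to retrieve the result asynchronously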

The result:

```json
{
  "status": "IN_QUEUE",
  "request_id": "unique-id-123",
  "response_url": "...",
  "status_url": "..."
}
```
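Outside n8n, the same queue submission is a single authenticated POST. The sketch below targets Node.js 18+ (built-in `fetch`) and assumes fal's `Authorization: Key <key>` header scheme and a `FAL_KEY` environment variable. The helper names are hypothetical, and model-specific inputs beyond `prompt` are left to the caller:

```javascript
// Sketch of a fal.ai queue submission, mirroring the "Creating clips" HTTP node.
// Assumes Node.js 18+ (global fetch) and an API key in process.env.FAL_KEY.
const QUEUE_BASE = "https://queue.fal.run";

function buildQueueRequest(modelId, input, apiKey) {
  return {
    url: `${QUEUE_BASE}/${modelId}`,
    init: {
      method: "POST",
      headers: {
        Authorization: `Key ${apiKey}`,
        "Content-Type": "application/json",
      },
      body: JSON.stringify(input), // escapes any quotes inside the scene prompt
    },
  };
}

async function submitToQueue(modelId, input, apiKey = process.env.FAL_KEY) {
  const { url, init } = buildQueueRequest(modelId, input, apiKey);
  const res = await fetch(url, init);
  if (!res.ok) throw new Error(`fal queue returned HTTP ${res.status}`);
  // Expected fields, as in the response above: status, request_id, response_url, status_url
  return res.json();
}
```

Example: `await submitToQueue("fal-ai/bytedance/seedance/v1/lite/text-to-video", { prompt: scene.description })`, called once per scene item.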

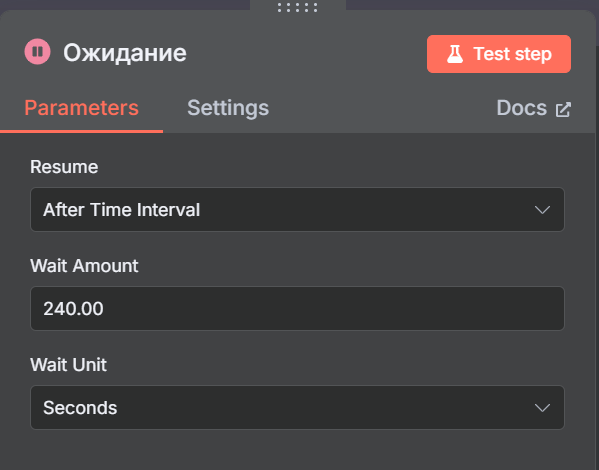

4.2 Waiting for Generation - Waiting

Purpose: Pause while the videos are generated

Settings:

- Amount: 240 seconds (4 minutes)

- Why: ByteDance Seedance takes time to generate high-quality videos
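A fixed 240-second wait is wasted time when the queue is fast and can be too short when it is busy. One alternative, which the original workflow does not use, is to poll the request's status_url until it reports COMPLETED. In the sketch below, the status check is injected as a function so the loop can be tested without network access. The interval and timeout values are placeholders:

```javascript
// Polls until the queue reports COMPLETED, or throws when the timeout expires.
// `getStatus` is any async function returning an object with a `status` field,
// e.g. one that GETs the status_url returned by the submission.
async function pollUntilComplete(getStatus, { intervalMs = 10_000, timeoutMs = 600_000 } = {}) {
  const sleep = (ms) => new Promise((resolve) => setTimeout(resolve, ms));
  const deadline = Date.now() + timeoutMs;
  while (Date.now() < deadline) {
    const { status } = await getStatus();
    if (status === "COMPLETED") return;
    if (status !== "IN_QUEUE" && status !== "IN_PROGRESS") {
      throw new Error(`unexpected status: ${status}`);
    }
    await sleep(intervalMs); // wait before asking again
  }
  throw new Error(`request not completed within ${timeoutMs} ms`);
}
```

A matching `getStatus` could be `() => fetch(submission.status_url, { headers: { Authorization: `Key ${key}` } }).then((r) => r.json())`. In n8n itself, the same idea maps to a Wait, HTTP Request and IF loop.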

4.3 Getting videos - Getting clips

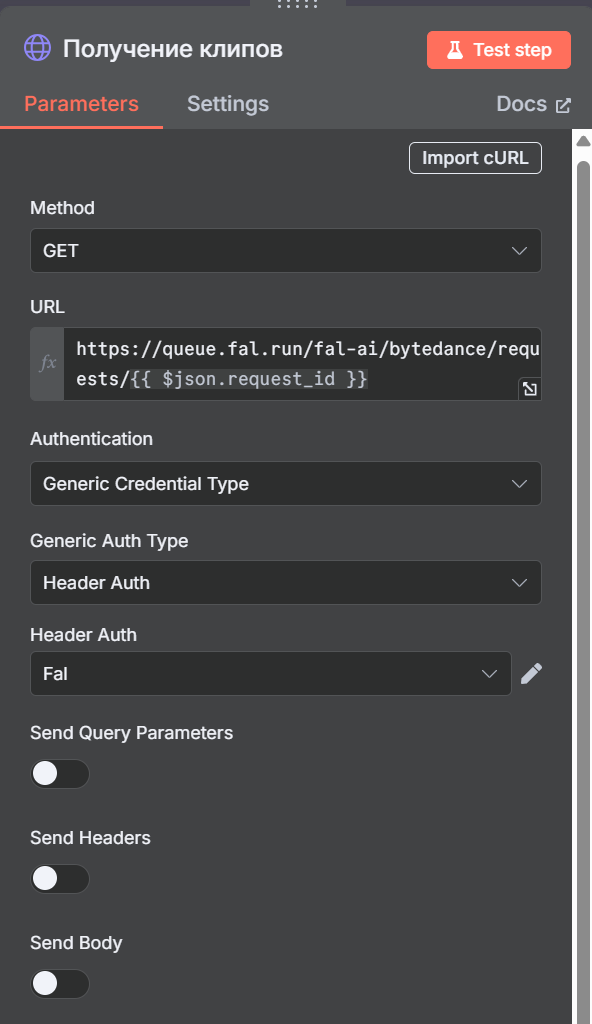

Purpose: Fetches the results of the finished clips (JSON containing each video URL)

HTTP Request settings:

- Method: GET

- URL: https://queue.fal.run/fal-ai/bytedance/requests/{{ $json.request_id }}

- Authentication: Fal API key

The result:

```json
{
  "video": {
    "url": "https://fal.media/files/video123.mp4",
    "content_type": "video/mp4",
    "file_name": "output.mp4",
    "file_size": 2500000
  }
}
```
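Two details of this step are easy to get wrong. First, the request ID must follow `requests/` with no space in between. Second, the result path uses the app prefix (`fal-ai/bytedance`), not the full model path used for submission. Reusing the response_url returned at submission avoids building the URL by hand. A sketch of both pieces, assuming the response shapes shown above; the helper names are hypothetical:

```javascript
// Prefer the response_url returned by the queue; fall back to building it
// from the app prefix, as the "Getting clips" node does.
function resultUrlFor(submission, appId = "fal-ai/bytedance") {
  if (submission.response_url) return submission.response_url;
  return `https://queue.fal.run/${appId}/requests/${encodeURIComponent(submission.request_id)}`;
}

// Pulls the clip URL out of a result shaped like { video: { url, ... } }.
function extractVideoUrl(result) {
  const url = result?.video?.url;
  if (typeof url !== "string" || url.trim() === "") {
    throw new Error("result has no video.url; the request may not be finished yet");
  }
  return url.trim();
}
```

In the n8n node, the equivalent of the first helper is setting the URL to `{{ $json.response_url }}`.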

STEP 5: CREATE AUDIO

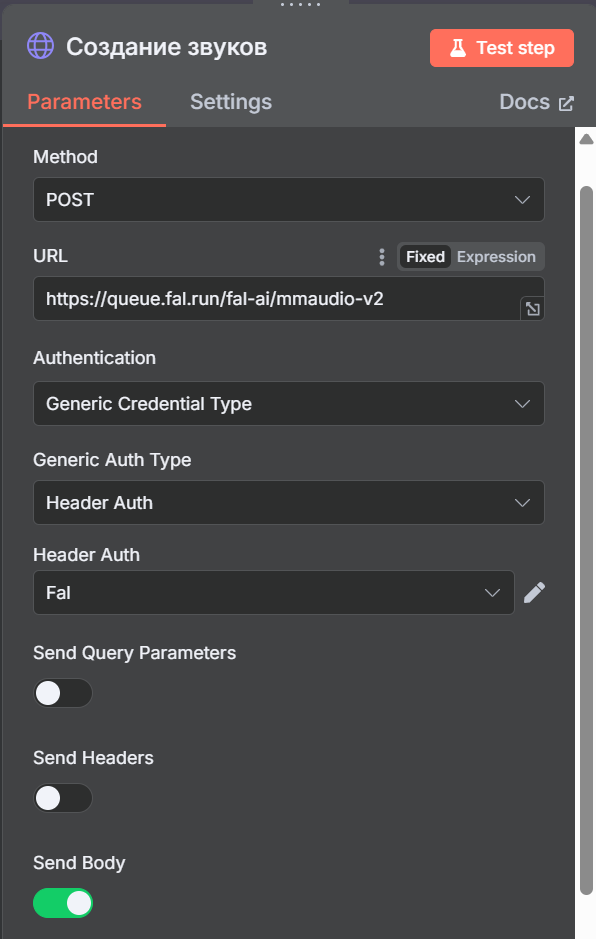

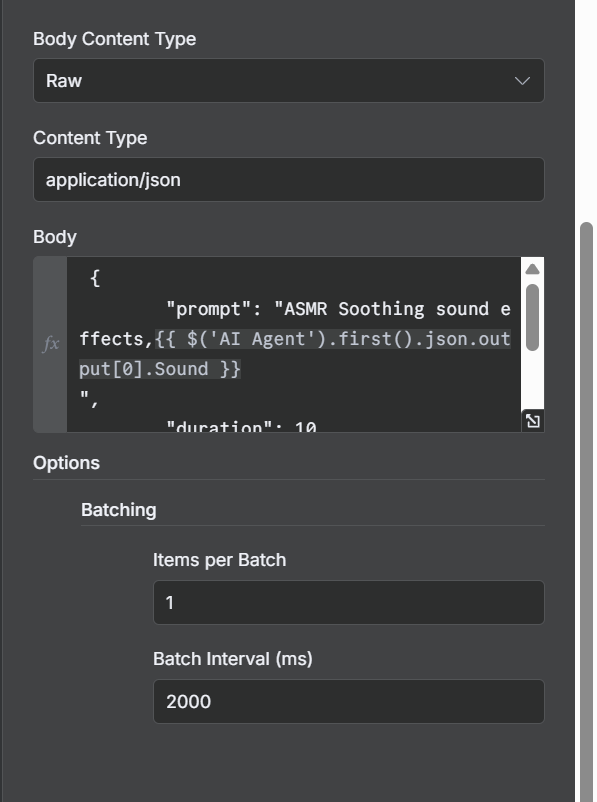

5.1 Generating sounds - Creating sounds

Purpose: Generates an ASMR soundtrack for each clip via MMaudio-v2

HTTP Request settings:

- Method: POST

- URL: https://queue.fal.run/fal-ai/mmaudio-v2

- Authentication: Fal API key

- Content-Type: application/json

JSON Body:

```json
{
  "prompt": "ASMR Soothing sound effects, {{ $('AI Agent').first().json.output[0].Sound }}",
  "duration": 10,
  "video_url": "{{ $json.video.url }}"
}
```

Parameters:

- prompt: Combines “ASMR Soothing sound effects” + sound description from the first stage

- duration: 10 seconds (matches the clip length)

- video_url: the URL of the clip for the current item (the node runs once per clip), which the model uses to sync the sound
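Placing `{{ ... }}` directly inside a JSON string breaks the request body whenever the Sound text contains a double quote. Building the body as an object and serializing it avoids that problem. The sketch below assumes the same three fields as the JSON body above; the helper name is hypothetical:

```javascript
// Hypothetical helper: builds the MMaudio-v2 request body for one clip.
// JSON.stringify escapes any quotes or newlines in the sound description.
function buildMmaudioBody(soundDescription, videoUrl, durationSec = 10) {
  return JSON.stringify({
    prompt: `ASMR Soothing sound effects, ${soundDescription}`,
    duration: durationSec,
    video_url: videoUrl,
  });
}
```

Inside n8n, the same effect comes from writing the value as `{{ JSON.stringify(...) }}` without surrounding quotes in the JSON body.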

Batching settings:

- Batch Size: 1 (one request at a time)

- Batch Interval: 2000ms (pause between requests)

5.2 Waiting for audio - Waiting1

Purpose: Pause while the sound is generated

Settings:

- Amount: 60 seconds

- Why: MMAudio takes time to create high-quality ASMR sound

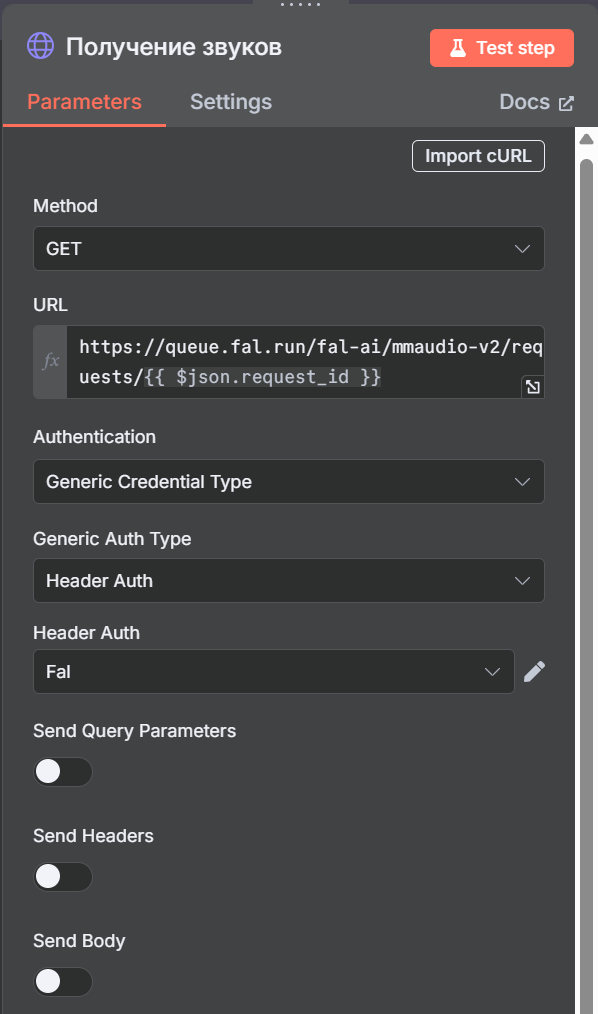

5.3 Receiving sounds - Receiving sounds

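Purpose: Retrieves the MMaudio-v2 results. Each clip comes back with its generated soundtrack, which is why the next step reads video.url from these items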

HTTP Request settings:

- Method: GET

- URL: https://queue.fal.run/fal-ai/mmaudio-v2/requests/{{ $json.request_id }}

- Authentication: Fal API key

STEP 6: EDITING THE FINAL VIDEO

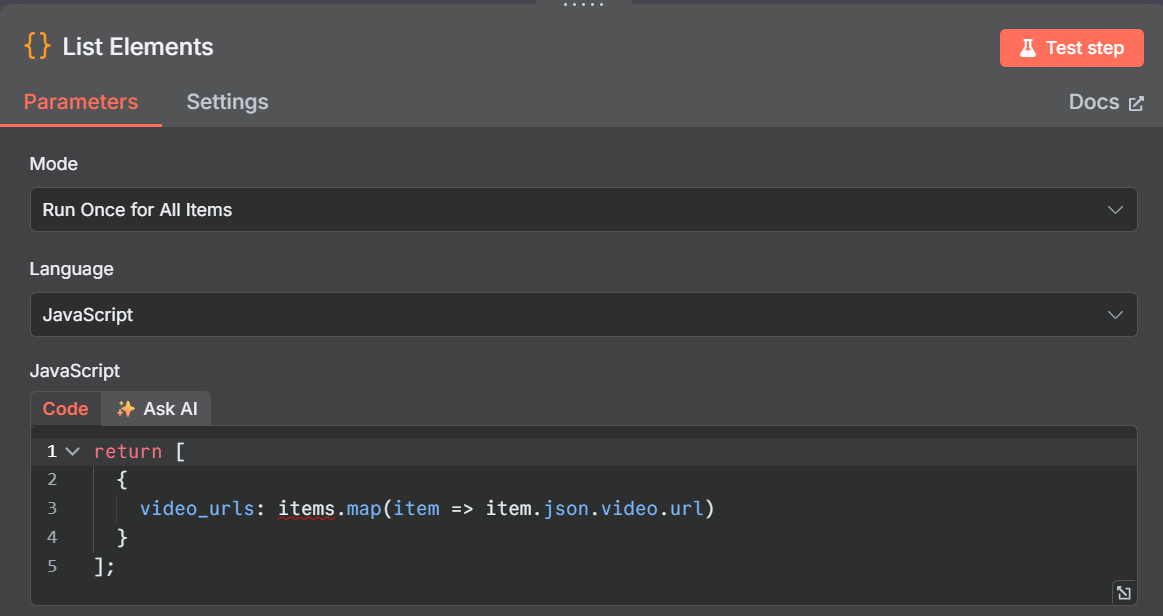

6.1 Preparing URLs - List Elements

Purpose: Collects the URLs of all video clips into one array

JavaScript code:

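```javascript
// n8n Code node: gather the clip URLs from all "Receiving sounds" items
// into a single item for the compose request.
return [
  {
    video_urls: items.map((item) => item.json.video.url),
  },
];
```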

What's going on:

- Gets all items from “Receiving sounds”

- Extracts the video URL from each item

- Creates a video_urls array for editing

The result: {video_urls: ["url1.mp4", "url2.mp4", "url3.mp4"]}

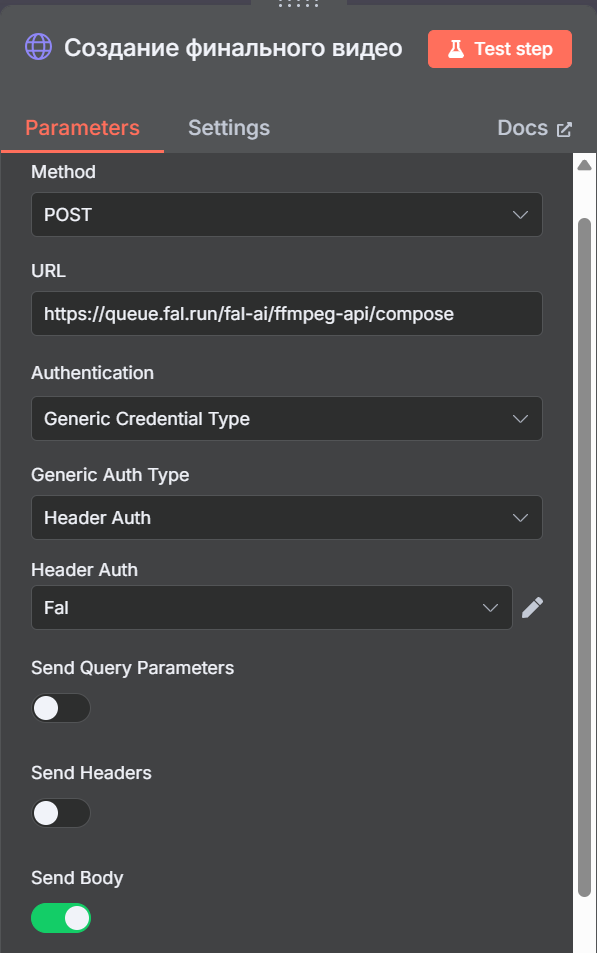

6.2 Creating the final video - Creating the final video

Purpose: Combines the clips into one 30-second video via the FFmpeg API (the body below places the first three clips on the timeline)

HTTP Request settings:

- Method: POST

- URL: https://queue.fal.run/fal-ai/ffmpeg-api/compose

- Authentication: Fal API key

- Content-Type: application/json

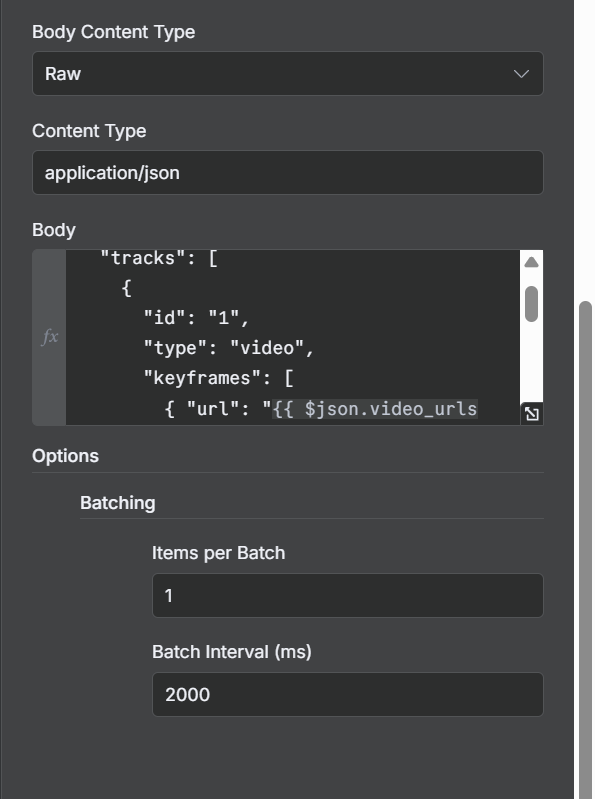

JSON Body:

```json
{
  "tracks": [
    {
      "id": "1",
      "type": "video",
      "keyframes": [
        { "url": "{{ $json.video_urls[0] }}", "timestamp": 0, "duration": 10 },
        { "url": "{{ $json.video_urls[1] }}", "timestamp": 10, "duration": 10 },
        { "url": "{{ $json.video_urls[2] }}", "timestamp": 20, "duration": 10 }
      ]
    }
  ]
}
```

Editing timeline:

- Track 1: Video track

  - Keyframe 1: Scene 1 (0-10 seconds)

  - Keyframe 2: Scene 2 (10-20 seconds)

  - Keyframe 3: Scene 3 (20-30 seconds)
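The body above hard-codes three keyframes, so a run that produced four to six scenes would still end after video_urls[2]. The sketch below instead derives the timeline from however many URLs List Elements returns. It assumes each clip lasts 10 units in the same timestamp/duration convention the body above uses, and the function name is hypothetical:

```javascript
// Builds the FFmpeg API compose body for any number of clips,
// placing them back to back on a single video track.
function buildComposeBody(videoUrls, clipDuration = 10) {
  if (!Array.isArray(videoUrls) || videoUrls.length === 0) {
    throw new Error("video_urls must be a non-empty array");
  }
  const keyframes = videoUrls.map((url, i) => ({
    url,
    timestamp: i * clipDuration, // each clip starts where the previous one ends
    duration: clipDuration,
  }));
  return { tracks: [{ id: "1", type: "video", keyframes }] };
}
```

With three URLs, this reproduces the body above exactly. With six, it yields a 60-second timeline, so the "30 seconds" figure holds only for three scenes.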

Batching settings:

- Batch Size: 1

- Batch Interval: 2000ms

6.3 Waiting for editing - Waiting 2

Purpose: Pause for video processing

Settings:

- Amount: 60 seconds

- Why: FFmpeg takes time to compose and encode the video

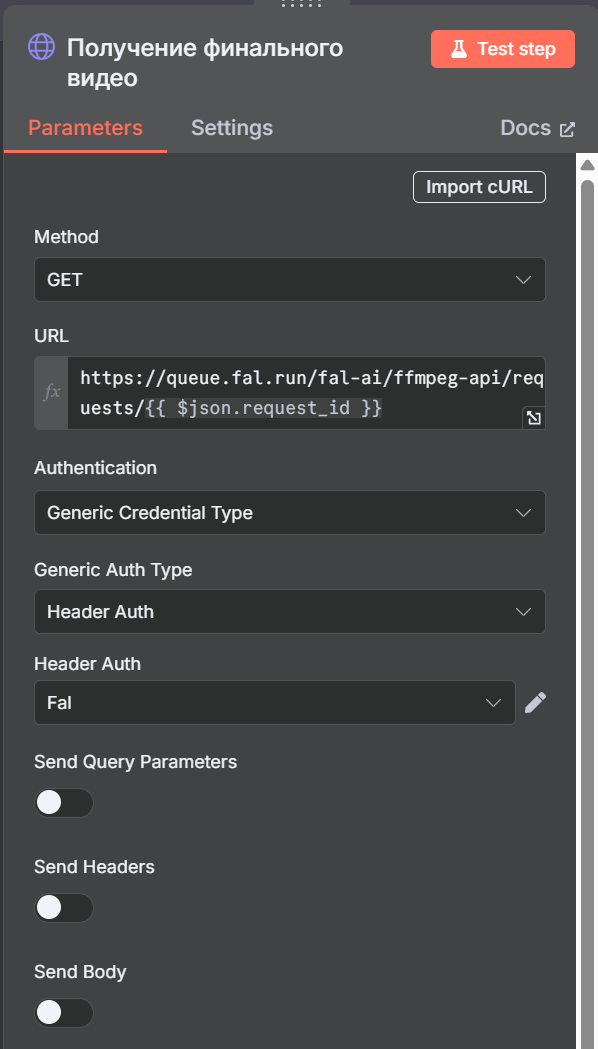

6.4 Getting the result - Getting the final video

Purpose: Retrieves the result with the URL of the finished edited video

HTTP Request settings:

- Method: GET

- URL: https://queue.fal.run/fal-ai/ffmpeg-api/requests/{{ $json.request_id }}

- Authentication: Fal API key

The result:

```json
{
  "video_url": "https://fal.media/files/final_video.mp4",
  "duration": 30,
  "format": "mp4"
}
```

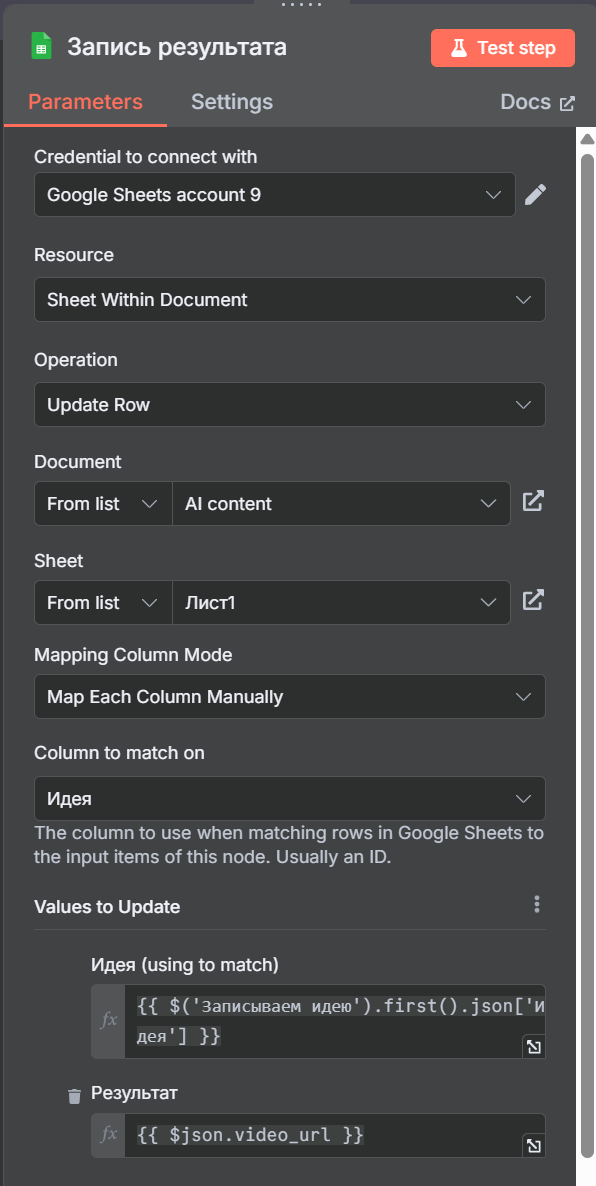

STEP 7: SAVE THE RESULT

7.1 Update the table - Record the result

Purpose: Updates Google Sheets with the URL of the finished video

Google Sheets settings:

- Operation: Update

- Matching Column: Idea

- Columns Mapping:

  - Result: {{ $json.video_url }}

  - Idea: {{ $('Writing down an idea').first().json['Idea'] }}

The result is in the table:

| Idea | Title | Environment | Sound | Status | Result |
|---|---|---|---|---|---|
| [AI idea] | [Caption + hashtags] | [Environment description] | [ASMR sound] | for production | [Video URL] |

Node connection diagram

Main flow:

1. When clicking 'Test workflow' → AI Agent

2. AI Agent → Writing down an idea

3. Writing down an idea → AI Agent1

4. AI Agent1 → Preparing Prompts

Video creation (one request per scene):

5. Preparing Prompts → Creating clips

6. Creating clips → Waiting (240 s)

7. Waiting → Getting clips

Creating audio:

8. Getting clips → Creating sounds

9. Creating sounds → Waiting1 (60 s)

10. Waiting1 → Receiving sounds

Editing:

11. Receiving sounds → List Elements

12. List Elements → Creating the final video

13. Creating the final video → Waiting 2 (60 s)

14. Waiting 2 → Getting the final video

15. Getting the final video → Record the result

AI Connections:

- OpenAI Chat Model1 (gpt-4o-mini) → AI Agent (idea generation)

- OpenAI Chat Model (gpt-4o-mini) → AI Agent1 (scripting)

- Think → AI Agent (self-test)

- Parser → AI Agent (structured idea output)

- Parser2 → AI Agent1 (structured scenario output)

Automation result

What you get:

- 30-second ASMR video with 3 scenes of material cutting

- Cinematic, macro-style footage

- ASMR audio generated by MMaudio-v2 to match each clip

- Viral caption with 12 hashtags (4 topic, 4 all-time popular, 4 trending)

- Editing via the FFmpeg API

Quality characteristics:

- Duration: 30 seconds (three 10-second clips)

- Sound: ASMR audio synchronized with the video

- Format: MP4 for maximum compatibility

Application:

- ASMR channels - automatic content creation

- Social media - viral videos for TikTok, Instagram

- Relaxation content - meditation and relaxation videos

- Educational channels - demonstration of material properties

Scaling:

- Can be run multiple times for different materials

- Easy to adapt to other ASMR niches

- Add more scenes (and timeline keyframes) for longer videos

- Integrate with publishing systems

This automation turns a simple idea into a finished ASMR video in about 7-10 minutes.