n8n automates the collection and publication of relevant news posts and YouTube videos on specific topics

Goal:

Automate the collection of relevant news and videos on selected topics, then build a personalized content feed and publish it to your mini-site.

Scope of application:

- Personal digests (bloggers, researchers)

- Business intelligence (tracking niche trends)

- Media resources (automated website content)

- Education (training kits)

🟩 1. Initialization and data sources

- When clicking 'Test workflow' — starts the workflow manually.

- YouTube Users (Set) — a list of the YouTube channels you want to follow.

- Queries (Set) — a list of search queries: top ai news, ai models, ai tools, ai prompting. (You can substitute your own.)

🟦 2. Receiving news through Tavily

- Split Out1 → Loop Over Items3 — splits the list of queries and processes each one in turn.

- Tavily — sends an API request to Tavily for each query to fetch the latest news.

- OpenAI — the LLM that processes the fetched news.

- Basic LLM Chain — rewrites each news item in plain language: a short headline plus engaging text.

- Parser — converts the response into the required JSON format.

- Supabase — saves the finished news to the news table with the News type.
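
The Tavily step above boils down to one HTTP request per query. Here is a minimal Python sketch of that request, assuming Tavily's public search endpoint with Bearer authentication. The helper name build_tavily_request, the topic and max_results values, and the placeholder key are illustrative assumptions, not settings taken from the workflow:

```python
import json
import urllib.request

# Assumption: Tavily's public search endpoint with Bearer authentication.
TAVILY_SEARCH_URL = "https://api.tavily.com/search"


def build_tavily_request(query: str, api_key: str, max_results: int = 5) -> urllib.request.Request:
    """Build (but do not send) one news-search request for a single query."""
    payload = {
        "query": query,
        "topic": "news",             # assumed value: restrict results to news
        "max_results": max_results,  # illustrative default
    }
    return urllib.request.Request(
        TAVILY_SEARCH_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


# One request per query, mirroring the Split Out / Loop Over Items pattern.
queries = ["top ai news", "ai models", "ai tools", "ai prompting"]
requests_to_send = [build_tavily_request(q, "tvly-YOUR_KEY") for q in queries]
```

Passing any of these objects to urllib.request.urlopen would send it; the sketch stops short of that so it can be inspected without a real key.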

🟨 3. Getting YouTube videos

- Split Out → Loop Over Items1 → Get Channel ID — gets the ID of each channel by username.

- Set → Loop Over Items2 → Get 1 Video — gets the latest videos from these channels.

- Split Items Again → Set VideoID — extracts VideoID.
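
The VideoID extraction above can be pictured with a small Python sketch. It assumes the Get 1 Video node returns data shaped like a YouTube Data API search.list response, where each item carries its ID under id.videoId. The function name and the sample data are hypothetical:

```python
def extract_video_ids(search_response: dict) -> list[str]:
    """Collect video IDs from a search.list-style response, skipping non-video items."""
    video_ids = []
    for item in search_response.get("items", []):
        video_id = item.get("id", {}).get("videoId")
        if video_id:  # channel or playlist results have no videoId
            video_ids.append(video_id)
    return video_ids


# Hypothetical sample response with one video and one channel result.
sample_response = {
    "items": [
        {"id": {"kind": "youtube#video", "videoId": "abc123"}},
        {"id": {"kind": "youtube#channel", "channelId": "UCxyz"}},
    ]
}
video_ids = extract_video_ids(sample_response)
```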

🟧 4. YouTube video processing

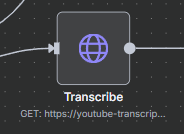

- Loop Over Items → Transcribe — fetches the video transcript via RapidAPI.

- Wait — adds a delay to avoid hitting rate limits.

- Summarization Chain (GPT-4o) — summarizes the video.

- Basic LLM Chain1 (OpenAI) — rewrites the summary in an engaging format.

- Parserx — structures the data.

- Supabase1 — saves the result to the news table with the YouTube type.

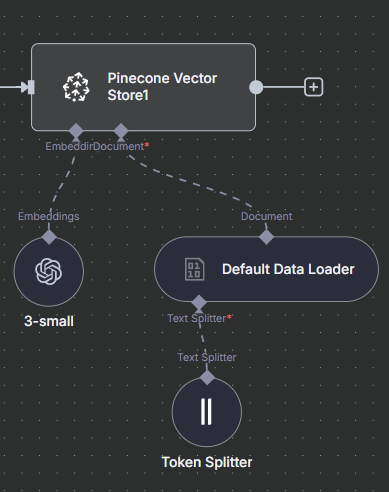
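
The parser-then-save steps can be sketched in Python as follows. This assumes the LLM replies with a JSON object containing title and content keys, matching the columns of the news table created later in this guide. The function name to_news_row is hypothetical:

```python
import json


def to_news_row(llm_output: str, item_type: str) -> dict:
    """Validate the LLM's JSON reply and shape it like a row of the news table."""
    data = json.loads(llm_output)
    if not isinstance(data, dict):
        raise ValueError("LLM output must be a JSON object")
    title = str(data.get("title", "")).strip()
    content = str(data.get("content", "")).strip()
    if not title or not content:
        raise ValueError("LLM output must contain non-empty 'title' and 'content'")
    # 'type' distinguishes articles ("News") from video summaries ("YouTube").
    return {"title": title, "content": content, "type": item_type}


row = to_news_row('{"title": " New model released ", "content": "Short summary."}', "YouTube")
```

Rejecting malformed replies at this point keeps empty rows out of the database, since both columns are declared NOT NULL.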

🟪 5. Indexing in Pinecone

- Token Splitter → Default Data Loader → Pinecone Vector Store1/2 — vectorizes and saves texts in Pinecone (separately for articles and videos).

- OpenAI Embeddings — used for embedding.
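
To illustrate what the splitting step does before embedding, here is a simplified Python sketch. It uses whitespace-separated words as a stand-in for real model tokens, and the chunk_size and overlap values are illustrative assumptions rather than the node's actual settings:

```python
def split_into_chunks(text: str, chunk_size: int = 200, overlap: int = 20) -> list[str]:
    """Split text into overlapping chunks of at most chunk_size words."""
    if chunk_size <= 0 or not 0 <= overlap < chunk_size:
        raise ValueError("need chunk_size > 0 and 0 <= overlap < chunk_size")
    tokens = text.split()  # stand-in for a real tokenizer
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(tokens), step):
        chunks.append(" ".join(tokens[start:start + chunk_size]))
        if start + chunk_size >= len(tokens):
            break  # the last chunk already reaches the end of the text
    return chunks


chunks = split_into_chunks("a b c d e f g h i j", chunk_size=4, overlap=1)
```

The overlap repeats a few words between neighbouring chunks so that a sentence cut at a chunk boundary still appears whole in at least one of them.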

🟫 6. Chatbot interface

- Webhook — receives an incoming request from a user (for example, from Telegram or Vercel).

- AI Agent — a prompt-driven agent that searches Pinecone based on the user's request.

- 4o-minix — the model that serves this agent.

- Pinecone Vector Store — retrieves the relevant content used to answer the question.

- Respond to Webhook — returns the response to the user.
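
Conceptually, the vector store lookup behind the agent ranks stored chunks by how similar their embeddings are to the embedding of the question. The Python sketch below shows that idea with cosine similarity over tiny hand-made vectors. It is a simplified illustration, not Pinecone's API, and the sample texts and vectors are invented:

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors; 0.0 if either has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def top_k(query_vec: list[float], store: list[tuple[str, list[float]]], k: int = 2) -> list[str]:
    """Return the texts of the k stored items most similar to the query vector."""
    ranked = sorted(store, key=lambda item: cosine_similarity(query_vec, item[1]), reverse=True)
    return [text for text, _ in ranked[:k]]


# Invented three-dimensional "embeddings" for illustration only.
store = [
    ("news about ai models", [0.9, 0.1, 0.0]),
    ("video about ai tools", [0.1, 0.9, 0.0]),
    ("unrelated item", [0.0, 0.0, 1.0]),
]
best_matches = top_k([1.0, 0.2, 0.0], store, k=2)
```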

📦 Intermediate data and storage

- Supabase — a database where all news and videos are stored.

- Pinecone — a vector store that provides fast semantic search.

For this automation, you will need the following services:

- Supabase https://supabase.com/dashboard/project/pspttgyqfviazhuxuaxg/settings/api

- Tavily AI https://app.tavily.com/home

- Lovable https://lovable.dev/

- Google Cloud Console - YouTube Data API https://console.cloud.google.com/marketplace/product/google/youtube.googleapis.com?q=search&referrer=search&authuser=1&inv=1&invt=AbwHvg&project=astute-expanse-457620-b7

- Pinecone https://app.pinecone.io/

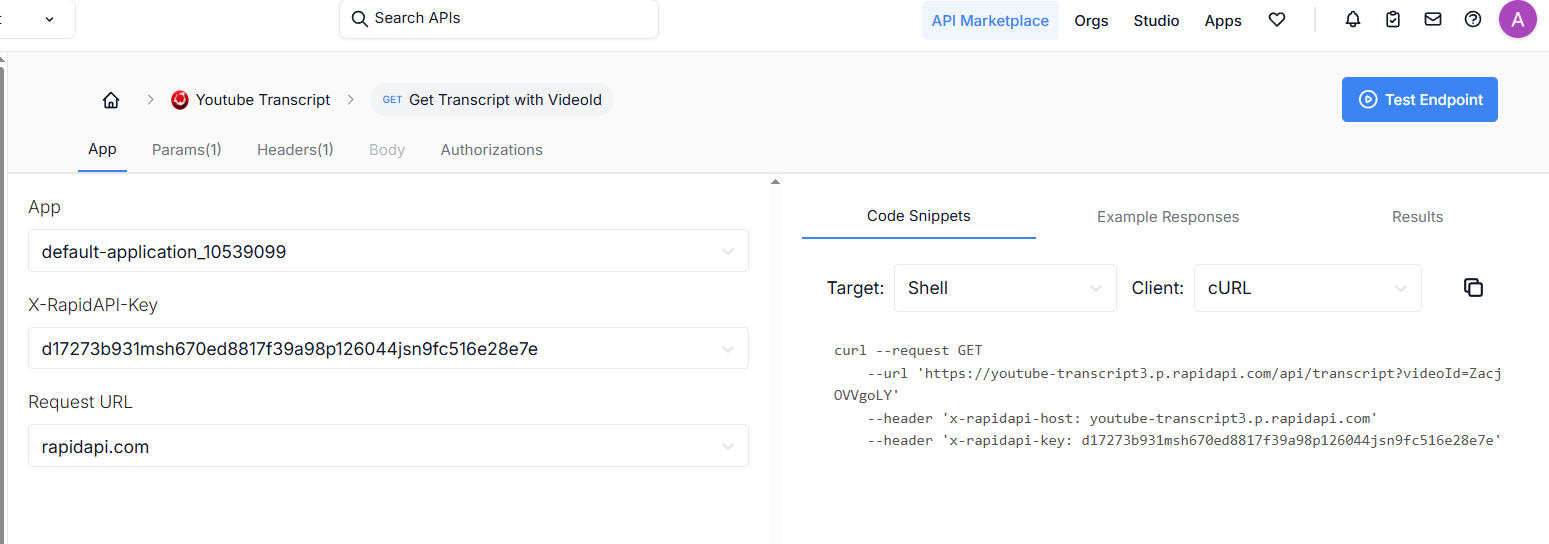

- RapidAPI https://rapidapi.com/solid-api-solid-api-default/api/youtube-transcript3/playground/apiendpoint_b46d1962-a219-453c-afd6-b94a336a61ae

First, set up your API keys, starting by connecting your credentials in the OpenAI nodes

Next, connect your credentials to the Pinecone vector store

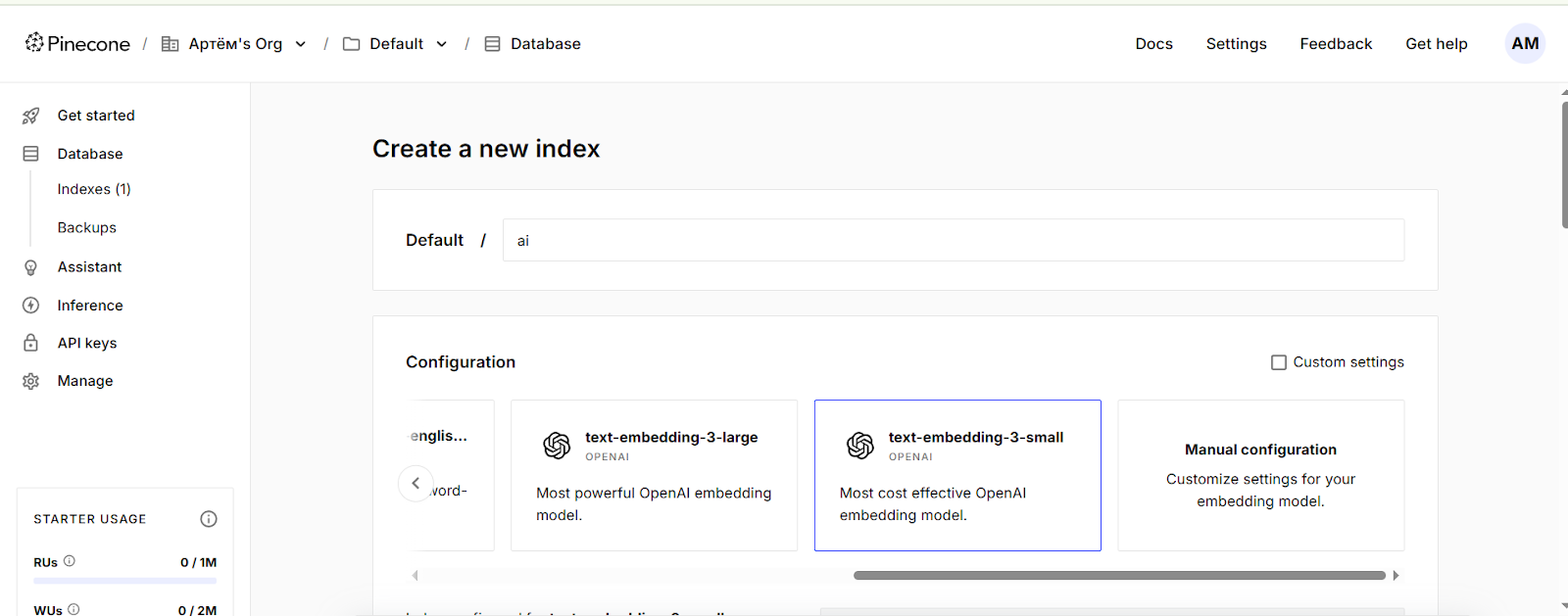

To do this, go to the Indexes section in Pinecone and click Create index, give the index a name, and select text-embedding-3-small in the configuration

Then go to the API keys section and create a new API key

Then paste the API key into n8n

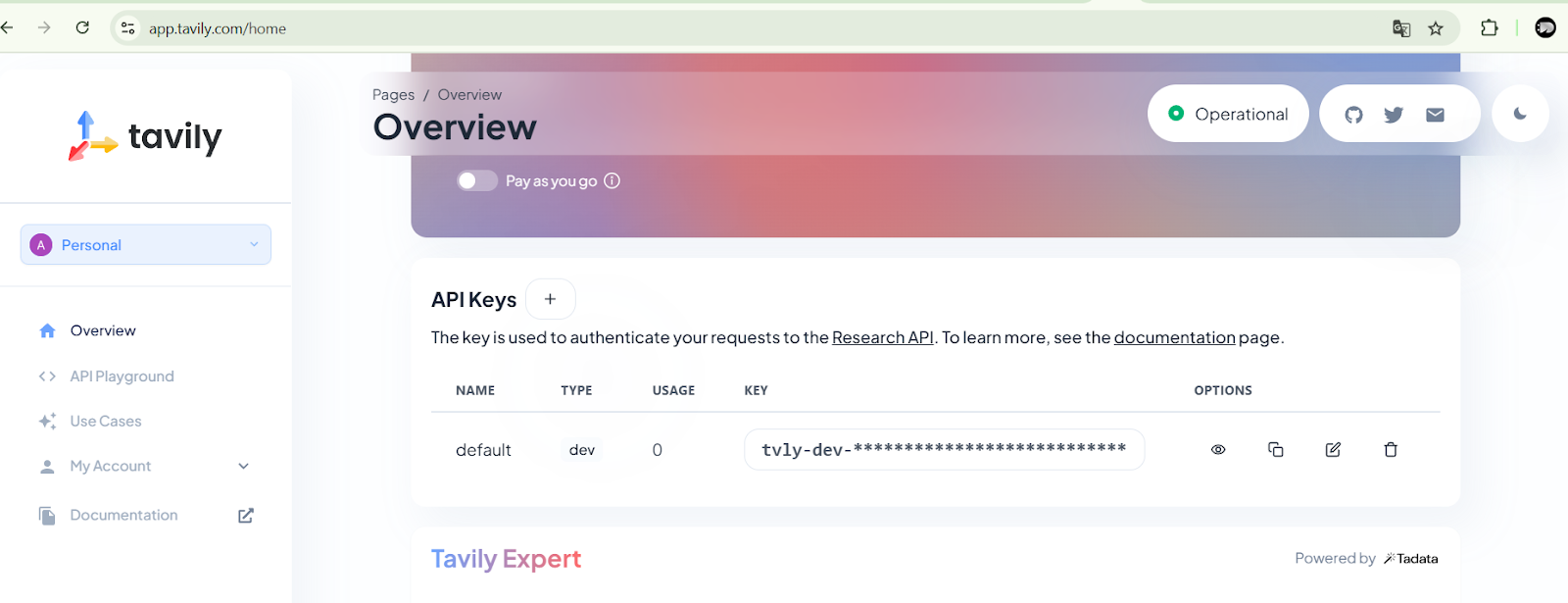

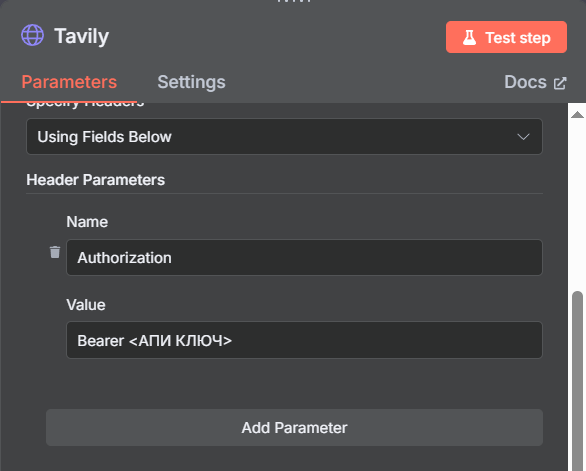

Next, connect your Tavily API key. You can get it at

https://app.tavily.com/home

Paste the key in the Tavily node, to the right of the word Bearer

In the Get 1 Video and Get Channel ID nodes, connect the same OAuth credentials that we used to connect Google Drive in past automations. Before doing that, go to https://console.cloud.google.com/marketplace/product/google/youtube.googleapis.com?q=search&referrer=search&authuser=1&inv=1&invt=AbwHvg&project=astute-expanse-457620-b7 and click Enable API to activate the YouTube API.

Now, in the Transcribe node, connect the API key from the RapidAPI service

You will see a page like this

Here, copy the x-rapidapi-host and x-rapidapi-key values and paste them into n8n

Next, connect Supabase

Sign in to your account at https://supabase.com/dashboard/

Create a new organization and a new project

Then go to the SQL Editor section

and run the following commands:

    CREATE TABLE public.news (
      id UUID PRIMARY KEY DEFAULT gen_random_uuid(),
      title TEXT NOT NULL,
      content TEXT NOT NULL,
      type TEXT DEFAULT 'general',
      created_at TIMESTAMP WITH TIME ZONE NOT NULL DEFAULT now()
    );

    CREATE TABLE public.completed (
      id UUID PRIMARY KEY DEFAULT gen_random_uuid(),
      title TEXT NOT NULL,
      content TEXT NOT NULL,
      type TEXT DEFAULT 'general',
      created_at TIMESTAMP WITH TIME ZONE NOT NULL DEFAULT now()
    );

    CREATE TABLE public.webhooks (
      id UUID PRIMARY KEY DEFAULT gen_random_uuid(),
      name TEXT NOT NULL,
      webhook_url TEXT NOT NULL,
      created_at TIMESTAMP WITH TIME ZONE NOT NULL DEFAULT now()
    );

    CREATE TABLE public.chat_options (
      id UUID PRIMARY KEY DEFAULT gen_random_uuid(),
      name TEXT NOT NULL,
      chat_text TEXT NOT NULL,
      chat_query TEXT NOT NULL,
      created_at TIMESTAMP WITH TIME ZONE NOT NULL DEFAULT now()
    );

    CREATE OR REPLACE FUNCTION public.delete_news_item(item_id uuid)
    RETURNS boolean
    LANGUAGE plpgsql
    SECURITY DEFINER
    AS $function$
    DECLARE
      deleted_count INTEGER;
    BEGIN
      DELETE FROM public.news
      WHERE id = item_id
      RETURNING 1 INTO deleted_count;

      -- Return true if one or more rows were deleted
      RETURN COALESCE(deleted_count, 0) > 0;
    END;
    $function$;

    CREATE OR REPLACE FUNCTION delete_rows()
    RETURNS BOOLEAN AS $function$
    DECLARE
      deleted_count INTEGER;
    BEGIN
      -- Example deletion (replace with your actual DELETE statement)
      DELETE FROM some_table WHERE some_condition;

      GET DIAGNOSTICS deleted_count = ROW_COUNT;

      -- Return true if one or more rows were deleted
      RETURN COALESCE(deleted_count, 0) > 0;
    END;
    $function$ LANGUAGE plpgsql;
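
Once the tables above exist, rows can be written to them over Supabase's auto-generated REST interface, which is roughly what the Supabase node does on your behalf. The Python sketch below builds such an insert request. The project URL, key, and helper name are placeholders and assumptions, not values from this workflow:

```python
import json
import urllib.request


def build_insert_request(project_url: str, api_key: str, table: str, row: dict) -> urllib.request.Request:
    """Build (but do not send) a REST insert for one row of the given table."""
    return urllib.request.Request(
        f"{project_url.rstrip('/')}/rest/v1/{table}",
        data=json.dumps(row).encode("utf-8"),
        headers={
            "apikey": api_key,
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
            "Prefer": "return=representation",  # ask for the inserted row back
        },
        method="POST",
    )


# Placeholder project URL and key; the row uses the columns of public.news.
insert_request = build_insert_request(
    "https://YOUR-PROJECT.supabase.co",
    "YOUR_SUPABASE_KEY",
    "news",
    {"title": "Sample headline", "content": "Sample text", "type": "News"},
)
```

The id and created_at columns are left out on purpose, because the table definitions fill them in with defaults.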

Now set up the external site where everything will be displayed

Go to https://lovable.dev/projects/ed99ca42-7ba8-46ef-835e-ee12a96f5ab8

Click the Remix it button at the top right

Click the green Supabase icon

and connect your Supabase account

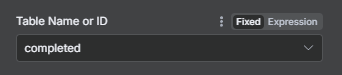

Also, in the Supabase node in n8n, make sure the completed table is selected in the Table Name or ID field. After the automation runs, the data will be loaded and displayed on the site.

You can also customize the topics and channels used to search for news and videos in these two nodes: Queries and YouTube Users.

That's it.

The automation now works together with your site and publishes the news feed there.

You can always get a JSON file and instructions in video format by joining our unique Automation club.